07 December 2024

Do you own a Sony camera? Then you probably know the pain of trying to use a phone to remotely trigger the camera. I gave up on using WiFi and the official Play Memories app for that. …or Imaging Edge or whatever it is called now. For quite a while I carried a primitive IR remote, until I learned that my α6400 can actually be controlled via Bluetooth. So, I created the app that I wished Sony would provide. Of course it’s free and open source.

The video is mostly about my process of going through all the options to remotely trigger my camera and, yes, I took this as an excuse to create my first “Top 5” listicle. It is a good starting point if you want to know what problem this app solves and if it is for you. This article goes into more detail about how the app works, how to control the camera via Python, what the app can do, what it cannot do and how to automate the app from other apps like Tasker.

Please note that this article contains affiliate links, i.e. links that tell the target site that I sent you there and that earn me a share of their revenue in return, i.e. as an Amazon Associate I earn from qualifying purchases. In contrast to regular external links, such affiliate links are marked with a dollar sign instead of a box.

Before we start, for those who just want the quick overview:

In 2014 I bought a Sony NEX-5T, my first camera with interchangeable lenses. Since then I have been locked into the Sony ecosystem of cameras. Well, “locked” might sound too negative. I have switched to an α6400 since then and while I am sticking to the cheaper and more portable world of the APS-C format, I have collected quite a few lenses and accessories, which makes switching to other brands expensive and complicated (actually, the form factor of the a7C II looks like a nice compromise to keep using light APS-C lenses on trips and use full-frame glory at home - but that price is not for me).

At the beginning Sony was one of the few manufacturers taking mirrorless cameras seriously and definitely a solid choice. Now others have caught up and it seems like the differences are more in the details, habits of use and brand identity. I do not have an eye for which brand has the best color science2, best IBIS, best autofocus or whatever. If I had to start over I would not mind looking at the other brands, but at the same time I am happy with what I have and what Sony delivers.

Except for one thing.

Their bloody software.

The α6400 has barely seen any firmware updates, its menus are terrible and several functions exclude each other3 when I see no good reason why they should. But the worst of them all are its WiFi features. Even my old NEX-5T already had the option to transfer pictures via WiFi and remotely control the camera from my phone with a live view. Unfortunately, it sucked on the NEX-5T and it still sucks on the α6400. It takes ages to connect to it (if it connects at all), the transfer speed is all over the place (usually slow with just enough short speed boosts to show what might have been possible) and the app feels like a patchwork of systems that barely holds together. It was bad when it was called “Play Memories”, it was bad when it was called “Imaging Edge” and frankly I cannot say if the new “Creators’ App” is any good, because for some reason my five-year-old α6400 is not even supported anymore - because of course it isn’t. Sony changes its software ecosystem every few years and sees no need to support a camera that is still being produced.

But even if it was supported, I think that using WiFi as a remote control is not ideal. Yes, it has its benefits: The high bandwidth allows for a live preview and since it is based on a REST API, it is quite easy to control the camera through it like I did for my squirrel photo trap. But the big problem is that it takes a moment to set up and that it changes the networking setup of your phone. On older phones it would entirely replace whatever WiFi connection you have at the moment, but even on my Pixel 6, which can handle multiple WiFi connections at once, you can just tell how every app, including Imaging Edge itself, is confused by which network to use.

Because of this, I had been using a simple IR remote for a very long time, until I recently built a video booth for a friend’s wedding. He did not have the space for my massive bullet time video booth, so I wanted a minimal setup using only my α6400, a Raspberry Pi and a preview screen. The idea was that the guests would trigger a countdown and decide if they want to keep the result. This means that the Raspberry Pi controls video recording of the camera to capture a 5-second clip and eventually somehow shows the result of the recording to the guests and stores it depending on their decision. So, I needed control of the video recording state while showing a preview during recording, plus the ability to play back the recorded video clips. Which turned out to be very tricky:

| | Grab video via clean HDMI | Stream via USB | Get recording via USB | Download via WiFi remote API | File transfer via WiFi-SD-Card |
|---|---|---|---|---|---|
| USB control | No clean HDMI out with USB control during recording. | Preview stream has lower quality and rPi would have to handle preview rendering. | Video files can only be retrieved in mass storage mode. | The α6400 does not support video transfer via WiFi API and preview stream is low quality. | No clean HDMI out for preview (see grabbing clean HDMI). |
| WiFi API | Possible, but low quality compared to internal recording without a very expensive grabber. | See above, maybe not even possible with WiFi remote at the same time. | Requires manual switching to mass storage mode. | See above | Camera WiFi needs to be in AP mode and does not accept two WiFi devices, needs dual-WiFi setup. |
| Cable trigger | No recording state feedback making missed signals problematic. Also low quality as above. | See above | See above | See above | No recording state feedback making missed signals problematic. |
| IR remote | See above | See above | See above | See above | See above |
| Bluetooth remote | No extra hardware, but low quality as above. | See above | See above | See above | Hacky, but worked perfectly. |

The table shows all the combinations of remote control and retrieving recordings I know of - and all the reasons why no combination worked. I was close to once again building a setup with a primitive cable trigger or a custom IR sender4 when I accidentally found that my α6400 had another method of remote control that I did not know of: Bluetooth.

The advantage for the video booth was small, but important. No need for extra hardware as the Pi already has Bluetooth support, but more importantly the camera sends its recording state, so my software could react to it. With a simple cable remote or IR sender I could only toggle the recording state and hope that no signal is ever missed. Otherwise the camera would record only when it is not supposed to - until another signal is missed.
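To make the missed-signal problem concrete, here is a toy simulation. It is not code from the booth: the `Camera` class and both control functions are made up for illustration, and "lost" simply means that a toggle signal never reaches the camera.

```python
class Camera:
    """Toy model of a camera whose record button can only be toggled."""

    def __init__(self):
        self.recording = False

    def toggle(self):
        self.recording = not self.recording


def blind_control(camera, wanted_states, lost):
    """Toggle without feedback; signals whose step index is in `lost` never arrive."""
    assumed = False  # what the controller believes the camera is doing
    history = []
    for step, wanted in enumerate(wanted_states):
        if wanted != assumed:
            if step not in lost:
                camera.toggle()
            assumed = wanted  # the controller cannot know the signal was lost
        history.append(camera.recording)
    return history


def feedback_control(camera, wanted_states, lost):
    """Same situation, but the controller compares against the reported state."""
    history = []
    for step, wanted in enumerate(wanted_states):
        if wanted != camera.recording and step not in lost:
            camera.toggle()
        history.append(camera.recording)
    return history


wanted = [True, False, True, False]
blind = blind_control(Camera(), wanted, lost={1})
aware = feedback_control(Camera(), wanted, lost={1})
print("wanted:", wanted)
print("blind: ", blind)
print("aware: ", aware)
```

After the single lost signal, the blind controller stays inverted for every following step, while the state-aware one is back in sync by the end of the sequence.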

But the ease of using the Bluetooth control made me realize another thing: Simple triggering from a smartphone should be done via Bluetooth. You cannot get a preview stream via Bluetooth and you cannot download the picture, but that is not the point. I just want to be able to set up the camera for a family picture, take out my phone and trigger with the press of a button without having to wait - and without letting everyone else wait. And this is what we all know from Bluetooth. Take out your Bluetooth headset and it connects to your phone pretty much on the way from the case to your ears. It literally reaches your ears in time for you to hear the connect-chime.

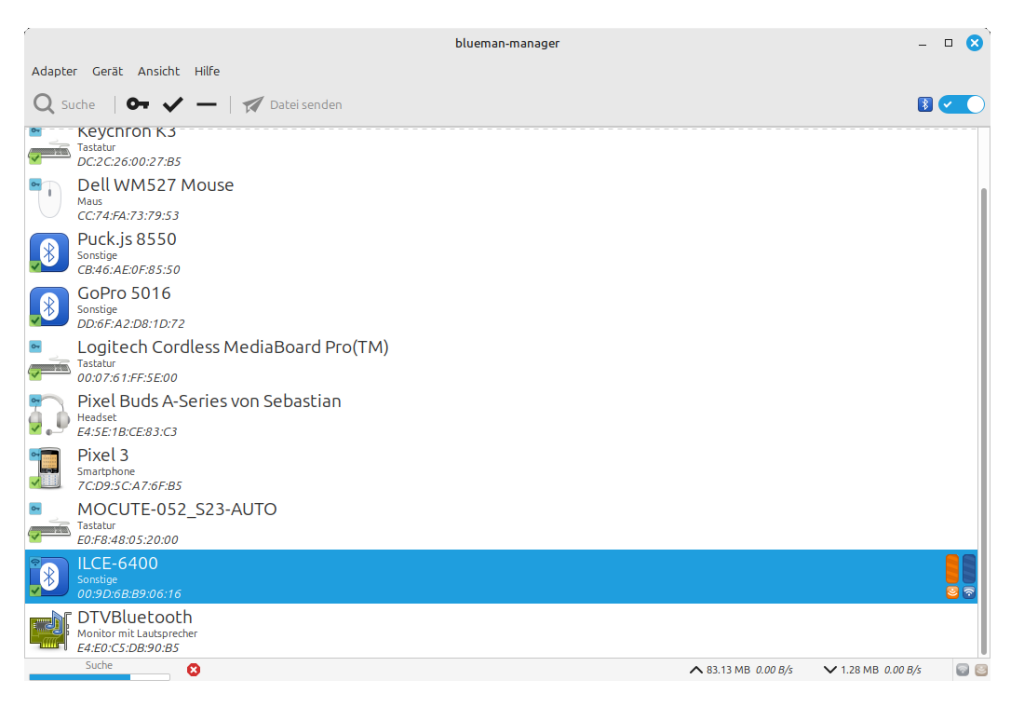

So, that’s what I created. An app that connects to your camera and shows remote control buttons in your phone’s notification area within seconds of turning it on:

Of course it also vanishes again as soon as you turn the camera off.

How do you control the camera via Bluetooth? Is there a documented API by Sony? Unfortunately not. While Sony has documented its WiFi API5, the Bluetooth API is not documented, but since it is based on Bluetooth Low Energy, it is not too hard to just look at which services and characteristics are exposed and see which characteristics can be subscribed to and what is sent by the camera. Figuring out commands to be sent to the camera can be trickier, but luckily, this work has already been done by Coral, Greg Leeds and Mark Kirschenbaum, who looked at the communication of their remote and documented what they found. Thanks a lot!
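Looking at the exposed services and characteristics can be done in a few lines with bleak. This is only a sketch under assumptions: `describe_gatt`, `dump_gatt` and `main` are names invented here, the address is a placeholder, and the camera has to be paired and in range for the connection to work.

```python
import asyncio


def describe_gatt(services):
    """Return one line per characteristic: service UUID, characteristic UUID, properties."""
    lines = []
    for service in services:
        for char in service.characteristics:
            props = ",".join(char.properties)
            lines.append(f"{service.uuid} {char.uuid} [{props}]")
    return lines


async def dump_gatt(address):
    # bleak is imported here so that describe_gatt() can be tried without it installed.
    from bleak import BleakClient

    async with BleakClient(address) as client:
        for line in describe_gatt(client.services):
            print(line)


def main(address="AA:BB:CC:DD:EE:FF"):  # placeholder address, replace with your camera's
    asyncio.run(dump_gatt(address))
```

Calling `main()` prints the list. Characteristics that show `notify` in their properties are the ones worth subscribing to, and those with `write` are candidates for commands.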

And with that info, it was quite easy to trigger the camera for my simple video booth. The tricky part about proper handling of Bluetooth Low Energy is that everything happens asynchronously and you have to implement queues, take care of service discovery, handle callbacks and be prepared for failures at any point because it is a wireless connection after all. In case of the camera you also need to handle scanning, filtering of advertisement packets and pairing.
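To give an idea of what "implementing queues" means here, this is a minimal sketch of a command queue that keeps only one write in flight and retries on failure. It is not the app's implementation. `CommandQueue` and `flaky_write` are hypothetical names, and `OSError` stands in for whatever exception your BLE library raises (with bleak that would be `BleakError`).

```python
import asyncio


class CommandQueue:
    """Serialise writes so that only one BLE operation is in flight at a time."""

    def __init__(self, write, retries=2):
        self._write = write  # coroutine function: await write(payload)
        self._retries = retries
        self._queue = asyncio.Queue()

    async def send(self, payload):
        """Queue a payload and wait until it was written; returns the number of retries."""
        done = asyncio.get_running_loop().create_future()
        await self._queue.put((payload, done))
        return await done

    async def run(self):
        """Worker loop: take one command at a time and retry it on errors."""
        while True:
            payload, done = await self._queue.get()
            for attempt in range(self._retries + 1):
                try:
                    await self._write(payload)
                    done.set_result(attempt)
                    break
                except OSError as error:
                    if attempt == self._retries:
                        done.set_exception(error)


async def demo():
    written = []
    failures = [True]  # make the very first write fail once

    async def flaky_write(payload):
        if failures:
            failures.pop()
            raise OSError("simulated link error")
        written.append(payload)

    queue = CommandQueue(flaky_write)  # created inside the running event loop
    worker = asyncio.create_task(queue.run())
    attempts = await asyncio.gather(queue.send(b"\x01\x07"), queue.send(b"\x01\x09"))
    worker.cancel()
    return written, attempts


written, attempts = asyncio.run(demo())
print(written, attempts)
```

In a real setup `write` would wrap something like `client.write_gatt_char(...)`, and the worker would also need to cope with disconnects, which this sketch leaves out.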

Luckily, for a simple video booth, you know your camera and you know the one thing it is supposed to do. Even better, you are the only user, so you can take a lot of shortcuts.

So, I paired the camera with system tools, hard coded its Bluetooth address as well as the relevant characteristic’s UUID and just blindly wrote the byte sequences from Coral, Greg and Mark to the command characteristics using the Python module “bleak”, which allows me to just wait for the Bluetooth communication via asyncio. The result is just a few lines of Python code to trigger the camera. In fact, most lines simply define addresses or payload data with barely any additional logic:

```python
import asyncio

from bleak import BleakClient, BleakGATTCharacteristic

bleAddress = "AA:BB:CC:DD:EE:FF"  # Bluetooth address of the camera
bleCmdUUID = "0000ff01-0000-1000-8000-00805f9b34fb"  # UUID for command characteristic

# Camera commands, see https://gregleeds.com/reverse-engineering-sony-camera-bluetooth/
blePayloadFocusDown = bytearray([0x01, 0x07])
blePayloadFocusUp = bytearray([0x01, 0x06])
blePayloadTriggerDown = bytearray([0x01, 0x09])
blePayloadTriggerUp = bytearray([0x01, 0x08])


async def demo():
    async with BleakClient(bleAddress) as client:
        # Press shutter button half and then full
        await client.write_gatt_char(bleCmdUUID, blePayloadFocusDown)
        await client.write_gatt_char(bleCmdUUID, blePayloadTriggerDown)

        # Short wait as we do not observe focus state.
        # (You might want to observe focus and/or shutter state instead)
        await asyncio.sleep(0.2)

        # Release shutter button
        await client.write_gatt_char(bleCmdUUID, blePayloadTriggerUp)
        await client.write_gatt_char(bleCmdUUID, blePayloadFocusUp)

        # Disconnect, in most real applications you would stay connected
        await client.disconnect()


asyncio.run(demo())
```

A real application (and my video booth) should of course stay connected, automatically reconnect and maybe also subscribe to the status characteristic.
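Subscribing to the status characteristic could look roughly like the sketch below. Everything specific in it is an assumption that the article does not state: the status UUID (`ff02`) and the three-byte status messages are what I understand from the reverse-engineering notes linked in the code above, so verify them against your own camera. `decode_status` and `watch_status` are names made up for this example.

```python
import asyncio

# ASSUMPTION: UUID and byte values are taken from the linked reverse-engineering
# notes, not confirmed by this article. Check them against your own camera.
STATUS_UUID = "0000ff02-0000-1000-8000-00805f9b34fb"
STATUS_FIELDS = {0x3F: "focus_acquired", 0xA0: "shutter_active", 0xD5: "recording"}


def decode_status(data):
    """Return (field, is_active) for a 3-byte status message, or None if unknown."""
    if len(data) != 3 or data[0] != 0x02 or data[1] not in STATUS_FIELDS:
        return None
    return STATUS_FIELDS[data[1]], data[2] == 0x20


async def watch_status(address, seconds=30.0):
    # bleak is imported here so that decode_status() can be tried without it installed.
    from bleak import BleakClient

    def on_notify(_characteristic, data):
        print(decode_status(data))

    async with BleakClient(address) as client:
        await client.start_notify(STATUS_UUID, on_notify)
        await asyncio.sleep(seconds)  # keep the connection open while listening
```

Running `asyncio.run(watch_status("AA:BB:CC:DD:EE:FF"))` with your camera's address would print one tuple per status change for half a minute. A video booth would set a flag or an `asyncio.Event` in the callback instead of printing.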

Speaking of a real application, this became a bit more complicated when implementing these features as an Android app. Now the code runs without me anywhere nearby on devices that I have never personally seen in my life - and probably never will. So, I now have to handle scanning, pairing and any kind of error caused by software, hardware and wetware. I will not go into all the details of the implementation, which is entirely open on GitHub, and it suffices to know that BLE on Android sucks.

However, there is one important consideration that I want you to be aware of: the concept of “companion apps” and “companion services”. If you look at Sony’s Imaging Edge app, you will find one Bluetooth feature for geotagging. If you enable it, the app will stay active all the time waiting for your camera and whenever it is turned on, Sony’s app will notify you (including an entirely pointless vibration), write the current location to the camera and after you turn off the camera, the app stays around in your notification area in case your camera reappears.

Beautiful. Not only do I spend 95% of my day with my camera not even in the same room, most of the time when I use it I do not require an affirmative vibration from the phone in my pocket whenever I turn it on. The reason Sony does it like this is that for old Android versions this was the only way to automatically connect to a device when it is turned on.

Luckily, Android 8 introduced an alternative in 2017. The so-called Companion Device Manager (CDM) is a system service that allows apps to associate themselves with a specific device (with the user’s permission), making pairing and connecting much easier. But, more importantly, with Android 12 in 2021 it allowed apps to observe the device’s presence and to implement a Companion Device Service. What this means is that now the CDM keeps looking for the device and it launches the app’s service as soon as the device appears. If the device is turned off (or goes out of range) the CDM terminates the service again.

That’s why my app targets Android 12 or newer. It allows my app to stay entirely inactive when you are not using your camera. Unless you normally turn off Bluetooth entirely, your phone is keeping an ear open anyway and my app does not use any extra resources. My app will only be activated when your camera is nearby and turned on and the system kills it again as soon as the camera is gone.

Ok, great, so the app plays nice with my phone’s resources and is just there when it is needed. But what does it do?

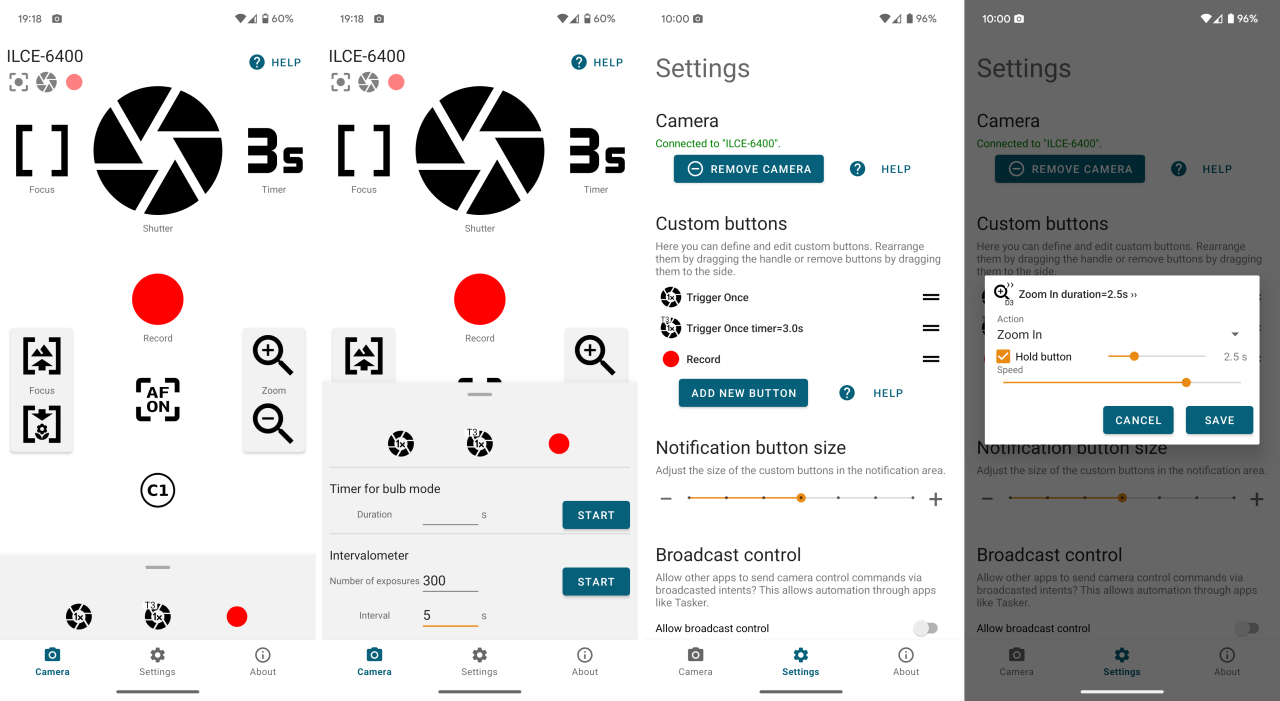

It can do anything that Sony’s remote can do, which is emulating button presses on the camera. It can press the shutter, the recording button, zoom in/out, focus in/out, the C1 button and the “AF On” button (that is the hold button in the AF/MF or AEL select switch or whatever that thing is called).

You can pick any of these buttons, add a self-timer or toggle mode if you like, and put them in your notification area. There is also a dedicated remote control and I have added some automations around these button presses, like a tool for bulb exposure and a simple intervalometer.

Unfortunately, these are just blind button presses. The camera reports neither the state of a button nor what function you might have mapped to it. My app has no idea what the “AF On” button does in your setup - it just presses it. The camera does not even report if it is in MF or any AF mode, so labels or behavior cannot be adapted accordingly. The only things it reports are whether autofocus has been acquired, whether the shutter is open and whether a video recording is active.

This leads to some problems even for simple triggering: The buttons in the notification area can only be tapped, but they do not distinguish how long you press them (for reasons6). So, you cannot hold the trigger until the shutter clicks and the same is true for a self-timer. The app needs to figure out how long to press the shutter.

But how does it know when to release the shutter? Well, that is a problem and I have implemented a total of three kinds of shutter that are ideal for different camera settings:

So, with all that in mind, what are the app’s limitations? Well, there is quite a list.

It lacks all the big features that cannot be done via Bluetooth: No preview image and no image transfer. It also lacks any kind of direct control over settings. It cannot set shutter time, ISO, white balance or anything like that, because it can only send button presses. It cannot send logical commands7.

This also applies to anything it actually can control: For example, there is no feedback from the focus in/out buttons and the amount it moves the focus depends on the lens being used. Therefore, there is no good way to implement focus bracketing. The app could in theory press the right buttons, but it cannot reliably move the focus to specific positions or even return to its original position.

So, you should think of this app as a handy small remote trigger. Like the physical remote controls (Bluetooth or IR) that you can buy from Sony. You just don’t have to carry it.

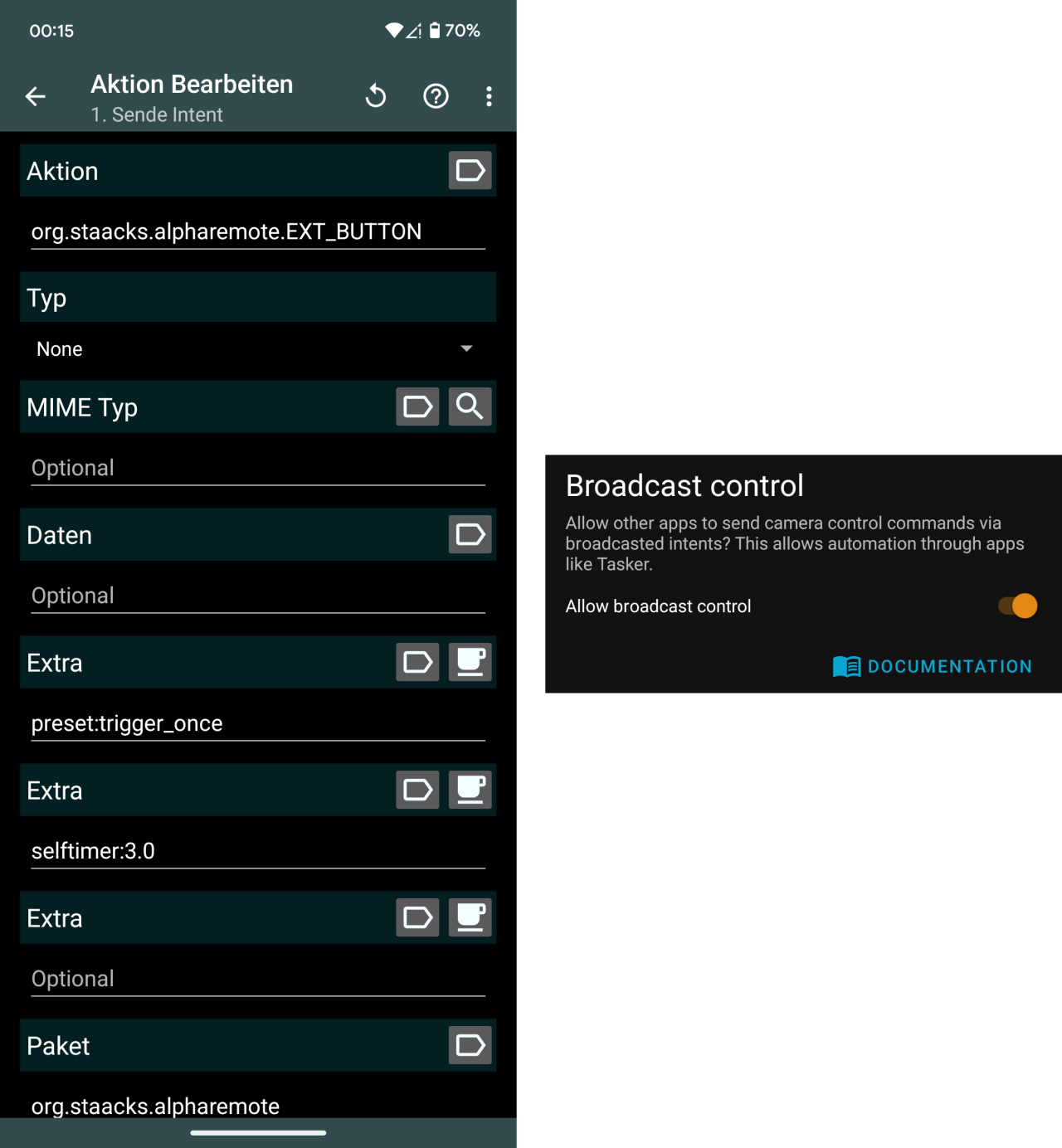

Well, there actually is one more interesting thing the app can do. In the settings view you can find an option to allow other apps to send commands via broadcasted intents. What is that? Intents are messages that apps can send to other apps. One app (or its part) might send a very specific intent to a specific different (part of an) app to trigger a function or transfer data. But an app can also listen for broadcasts that could come from any app and which may or may not be directed at a specific app. An example is your messaging app that tries to open an attached image when you tap on it. It will broadcast that it intends to open an image and any app that is able to open it may offer its service.

In case of my remote app, this will allow other apps to send an intent to trigger a command on the camera. This means that any other app may now be used as a remote trigger for your camera by going through my remote app. If you combine this with an app like Tasker, you can create any kind of automation and almost everything can become a remote for your camera. Tasker can use almost anything to start an automation and it can be configured to send a trigger intent to my app. Now anything can become a trigger. Your smartwatch, a smart motion detector, a text message to your phone - whatever you can think of. You can set Tasker to trigger your camera when your alarm clock rings on a rainy Tuesday if that’s what you want.

The setup for Tasker and any similar app should not be too complicated. You need to send an intent to the package org.staacks.alpharemote with the action org.staacks.alpharemote.EXT_BUTTON.

You also need to set an “extra” with the name preset to a value that represents one of the buttons (or functions). These are:

- STOP
- SHUTTER_HALF
- SHUTTER
- TRIGGER_ONCE
- TRIGGER_ON_FOCUS
- RECORD
- AF_ON
- C1
- ZOOM_IN
- ZOOM_OUT
- FOCUS_FAR
- FOCUS_NEAR

(Note that the app also accepts these in lower case.)

You can also set more “extras” to control optional additional parameters:

- toggle should be a boolean extra that controls if the button is just pressed or toggled on/off.
- selftimer should be a floating point extra that adds a self-timer in seconds.
- duration should be a floating point extra that sets how long the button should be pressed in seconds.
- step is a floating point extra in the range from 0.0 to 1.0 that determines the speed of jog controls like zoom or focus.
- down is a boolean extra that determines if a “down” event should be part of the command. This defaults to “true” meaning that pressing the button down is part of it, but if you set it to false (and leave up as it is) you can send a key release only.
- up is like down, just for the up event. It also defaults to true, so by default the intent would send a button press and release. But if you set up to false, you could send a button press without releasing it.

Note that Tasker does not specifically distinguish between different data types for its “extras”, but it seems to infer them from the value. For example, in order to send a “trigger once” with a self-timer of three seconds, you would set one “extra” to preset:trigger_once and another “extra” to selftimer:3.0 to get a string extra and floating point extra, respectively. (Not selftimer:3 without the decimal point.)
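If you would rather test the intent from a computer than from Tasker, a command line for Android's `am broadcast` tool can be put together as sketched below. This is only a sketch under assumptions that are not verified against the app: that the app accepts broadcasts coming from the adb shell, and that `--ef` (a float extra) is the right flag for its floating point extras. `broadcast_command` is a made-up helper. It only builds the argument list and does not run anything.

```python
import shlex

PACKAGE = "org.staacks.alpharemote"
ACTION = "org.staacks.alpharemote.EXT_BUTTON"


def broadcast_command(preset, toggle=None, selftimer=None, duration=None):
    """Build an `adb shell am broadcast` argument list for one remote command."""
    args = ["adb", "shell", "am", "broadcast", "-a", ACTION, "-p", PACKAGE,
            "--es", "preset", preset]  # string extra selecting the button
    if toggle is not None:
        args += ["--ez", "toggle", "true" if toggle else "false"]  # boolean extra
    for name, value in (("selftimer", selftimer), ("duration", duration)):
        if value is not None:
            # ASSUMPTION: the app reads these as float extras (--ef).
            args += ["--ef", name, repr(float(value))]
    return args


command = broadcast_command("trigger_once", selftimer=3)
print(shlex.join(command))
```

The resulting list can be handed to `subprocess.run(command)` on a machine with adb installed and the phone connected. The same preset and selftimer pair is what the Tasker example above sets as its two “extras”.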

Ok, that’s it. Keep in mind that this is more like a handy little remote trigger than a full-featured remote control.

If you like that and your camera supports Bluetooth remotes, get the app on Google Play or F-Droid:

Also keep in mind that I only have one camera and very few phones to test it with, so bear with me if you encounter issues and help me to fix them. Check the README on GitHub for common problems and report issues on the issue tracker.

1. Since I primarily wrote this app because I wanted this solution, I targeted the mobile OS that I use (Android 15) and checked how far I can extend it for others without too much additional work. So, no iOS8 and no Android 11 or below9.

2. I still don’t have a good eye for colors. Just like when I mix audio, I quickly adapt to what I am hearing/seeing and when I check what I did the next day, I really cannot believe how this sounded/looked good to me the day before. I have never been happy with the color grading of my videos and I cannot tell for the life of me where the differences in “color science” are for those cameras.

3. One example since we are on the topic of remotes: You can only use the Bluetooth remote, WiFi remote or IR remote and you have to manually enable one of them on entirely different settings screens. Oh, and you cannot use Bluetooth for geotagging and as a remote control at the same time for some reason. You have to pick one.

4. Which actually worked perfectly with the NEX-5T at my own wedding.

5. And deprecated, replaced and… Not entirely sure about the current state. I have a local copy of the documentation for the α6400 but could only find the new API version for the newer cameras. It’s probably like their app support: Still selling the model is no reason not to drop support for it.

6. Damn, you are curious. Well, the reason is that the notification area is not drawn by the app, but by the system and the app hands over so-called remote views to the system, which it can draw. These remote views are much more limited than anything drawn by the app itself, but more importantly anything triggered by user interaction will not be executed in the app. Instead you also hand over a pending intent which allows the system to trigger an action in your app when a button is pressed. This only supports a simple click (or tap), but not “down” or “up”.

7. Actually, there are more services on newer cameras that might allow for more control. But so far, there does not even seem to be a Sony remote that uses this and my α6400 does not have this characteristic, so I cannot even do wild guesses.

8. Some of you might know that I actually also develop an iOS app as part of my day job. But I do not use Apple devices in my free time10.

9. When targeting older Androids, I cannot use the Companion Device Service, which was introduced with Android 12. It is possible to extend the app for older Android versions, but it will require some work and a lot of testing on older devices. If someone wants to look into it, I will be happy to see a pull request.

10. No, I really don’t like their devices. The “it just works” philosophy ends where Apple did not expect you to go, where it is replaced by a “not possible” philosophy. Besides, Apple’s stuff just seems to break for me even when I am only doing what they expected me to do. So, I only wrote this app for Android11.

11. I know you would pay for that. Many Apple users have told me even before I fully released it. But it isn’t that easy12.

12. Ok, if you have to know: In order to publish an app for iOS, you need to pay for a developer account13, a Mac14 and an iPhone. But most importantly, I only invest the little spare time that my family and my full-time job leave me and money isn’t giving me that time back. Speaking of time, I should quit that nonsense with these footnotes15 and continue with the article16.

13. There still is no other way to distribute apps. No reasonable way anyway. When the EU tried to force Apple to allow third-party stores they threw a tantrum and made up some insane rules that all the Apple believers now defend as if it was just to protect them.

14. Because why should Apple allow developing apps for iOS on any other computer…

15. Or are these endnotes? It’s the same on a single page article, isn’t it?

16. But you also could just have stopped reading them long ago. Thanks for paying so much attention!